peleke-1

Learning the Language of Antibodies

We’re proud to introduce peleke-1, a series of fine-tuned protein language models that generate targeted antibody sequences. Simply give it an antigen sequence.

(Oh, and the models, code, and data are fully open-source and flexibly licensed.)

Our Latest Models.

-

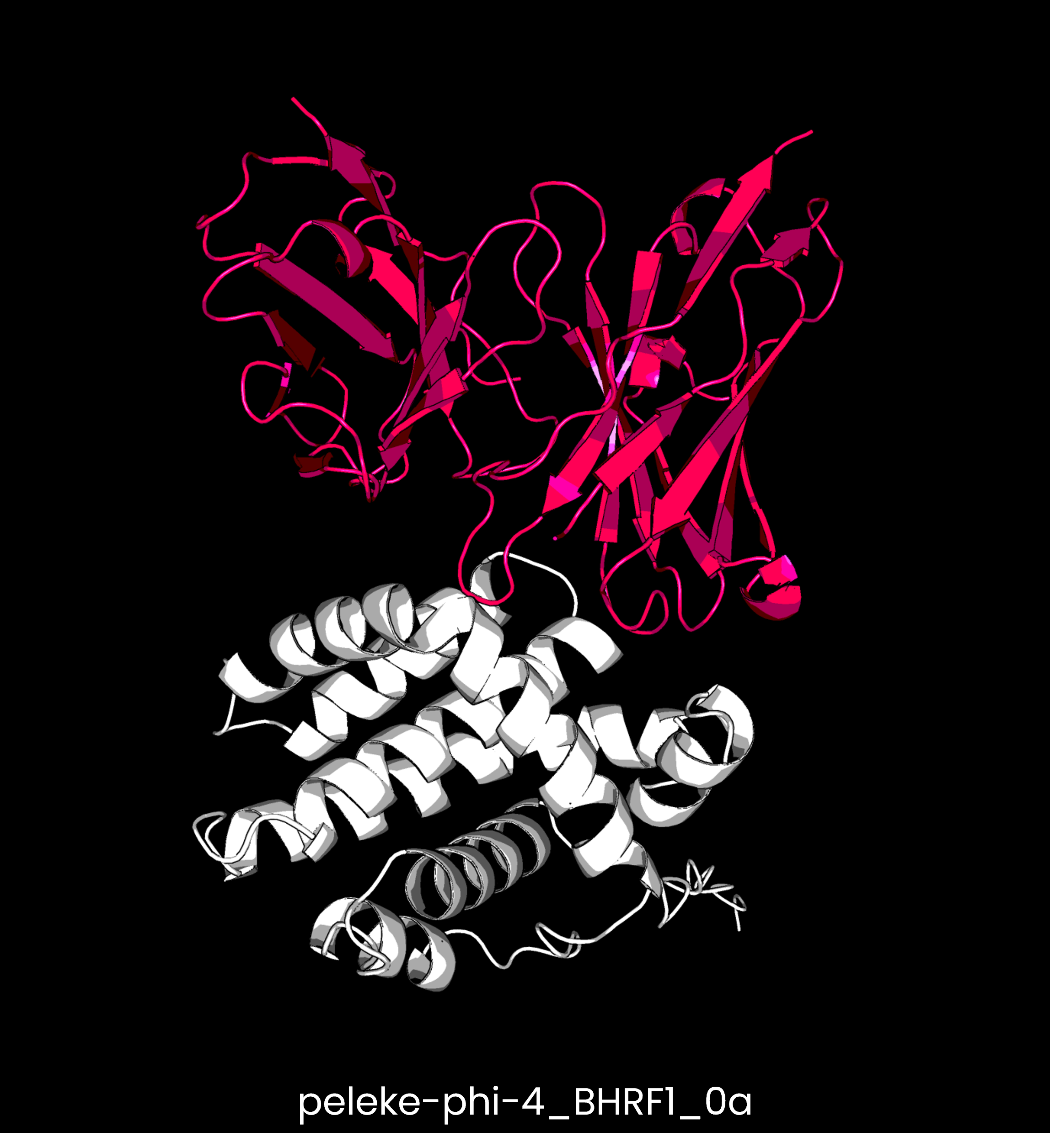

Based on Microsoft’s 14B-parameter model, this model handles large antigen inputs despite its relatively small size.

-

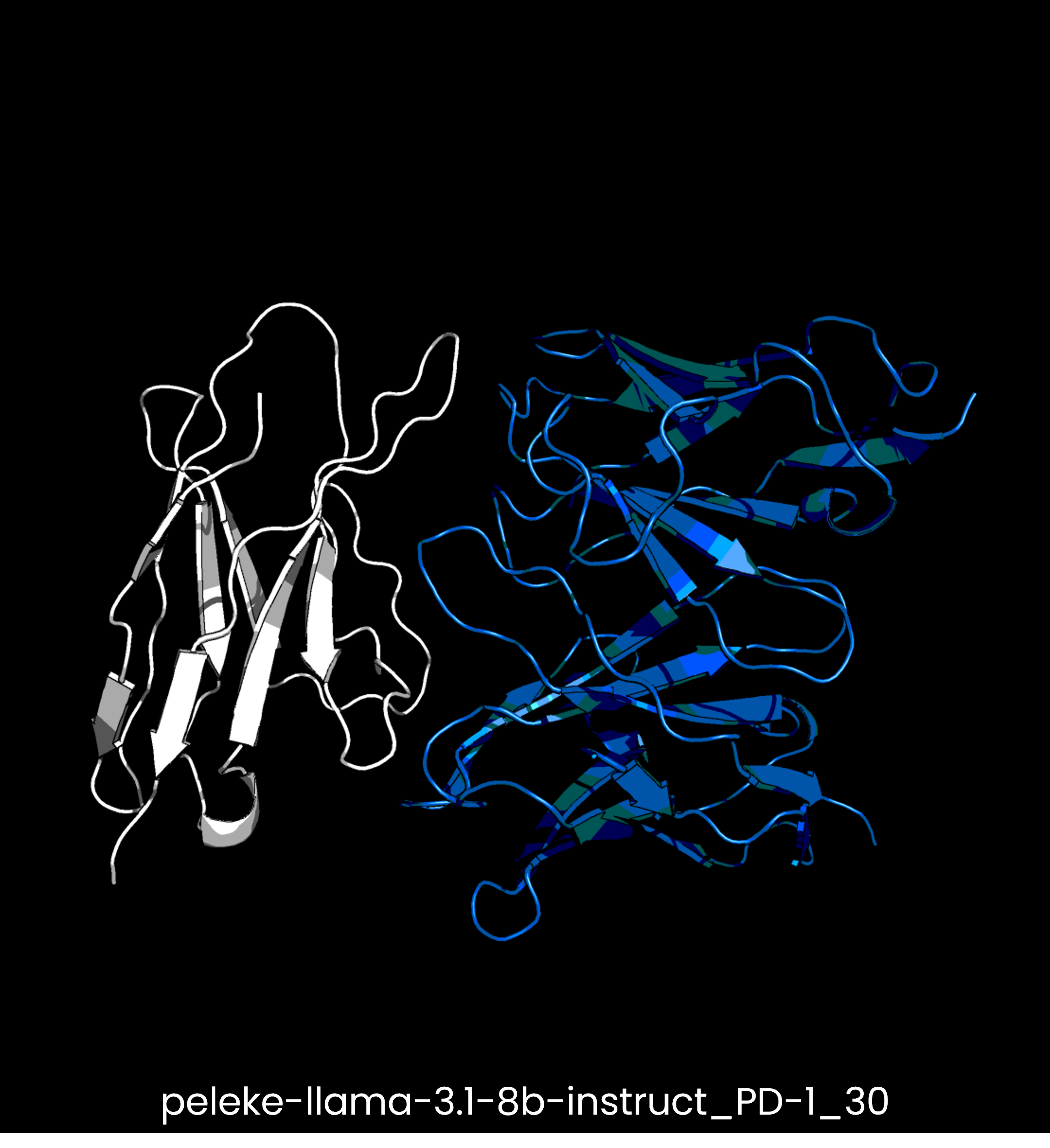

Llama 3.1 from Meta is a multilingual, instruction-tuned generative model with 8B parameters. This model generates antibody sequences from instruction-style prompts.

-

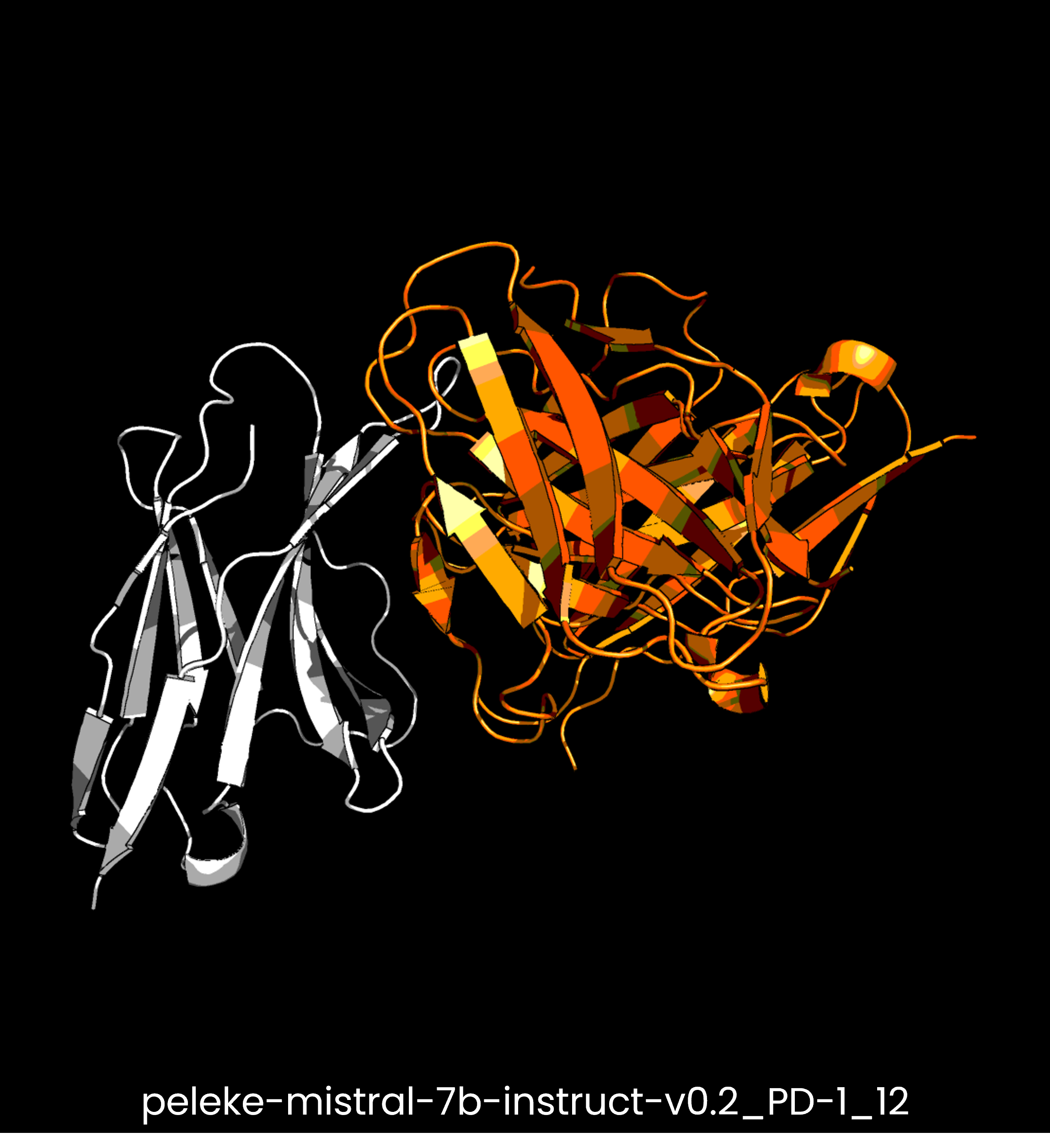

Mistral’s 7B Instruct v0.2 model is a powerful, instruction-tuned LLM known for its 32k-token context window, which works well for antibody generation.

How We Built peleke-1.

Data Curation

Using the Structural Antibody Database (SAbDab), we’ve curated a training dataset of over 9k antibody-antigen complex sequences and annotated the epitope residues.
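As a rough illustration of what annotating epitope residues can involve, the sketch below flags antigen residues that have any atom within a distance cutoff of any antibody atom. This is a common convention for structure-derived epitopes, not necessarily the procedure used for this dataset. The function name `epitope_positions`, the 4.0 Å cutoff, and the toy coordinates are all assumptions made for the example.

```python
import math

def epitope_positions(antigen_residues, antibody_atoms, cutoff=4.0):
    """Return 0-based indices of antigen residues in contact with the antibody.

    antigen_residues: list of residues, each a list of (x, y, z) atom coordinates.
    antibody_atoms:   flat list of (x, y, z) atom coordinates.
    cutoff:           contact distance in angstroms (assumed value, not from the source).
    """
    hits = []
    for index, residue_atoms in enumerate(antigen_residues):
        # A residue counts as epitope if any of its atoms is close to any antibody atom.
        in_contact = any(
            math.dist(atom, ab_atom) <= cutoff
            for atom in residue_atoms
            for ab_atom in antibody_atoms
        )
        if in_contact:
            hits.append(index)
    return hits

# Toy coordinates for illustration only (not real structure data).
toy_antigen = [
    [(0.0, 0.0, 0.0)],                     # residue 0: 3 A from an antibody atom
    [(10.0, 0.0, 0.0)],                    # residue 1: 5 A away at best, outside the cutoff
    [(3.0, 0.0, 0.0), (20.0, 0.0, 0.0)],   # residue 2: one atom 2 A away
]
toy_antibody = [(0.0, 0.0, 3.0), (5.0, 0.0, 0.0)]

contacts = epitope_positions(toy_antigen, toy_antibody)
```

In practice the coordinates would come from parsed SAbDab structure files, and the cutoff would be chosen to match whatever epitope definition the dataset uses.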

Model Tuning

We’ve selected a series of state-of-the-art LLMs and tuned them into ALMs (Antibody Language Models), which generate Fv sequences given an epitope-annotated antigen sequence input.

Output Evaluation

Generating letters is great, but our training approach also includes in silico validation of the generated amino acid sequences by folding and docking to the antigen.

How It Works.

Peleke models are designed to be easy to use with standard LLM tools and frameworks.

Simply provide an input antigen sequence with the desired epitope residues annotated, and the model will generate heavy and light chain Fv sequences to target the desired binding site.
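The workflow described here can be sketched in plain Python: mark the epitope residues in the antigen, build a prompt, and split the generated text into heavy and light chains. The annotation style (square brackets around epitope residues), the `Antigen: ... Antibody:` prompt template, and the `|` chain separator are assumptions made for illustration; check the model card for the exact format each model expects. The helper names are hypothetical, and the example strings are short placeholders rather than real model output.

```python
AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")

def annotate_epitope(antigen, epitope_positions):
    """Wrap each epitope residue (0-based index) in square brackets (assumed format)."""
    return "".join(
        f"[{residue}]" if index in epitope_positions else residue
        for index, residue in enumerate(antigen)
    )

def build_prompt(annotated_antigen):
    """Build a text prompt; this template is an assumption, not the documented one."""
    return f"Antigen: {annotated_antigen}\nAntibody:"

def split_chains(generated, separator="|"):
    """Split generated text into (heavy, light) and check for valid residue letters."""
    parts = [part.strip() for part in generated.split(separator)]
    if len(parts) != 2:
        raise ValueError("expected exactly one heavy and one light chain")
    for chain in parts:
        if not chain or set(chain) - AMINO_ACIDS:
            raise ValueError(f"not a valid amino acid sequence: {chain!r}")
    return parts[0], parts[1]

# Placeholder antigen fragment with residues 2, 3, and 7 chosen as the epitope.
annotated = annotate_epitope("MKTAYIAKQR", {2, 3, 7})
prompt = build_prompt(annotated)

# Placeholder standing in for text returned by the model.
heavy, light = split_chains("EVQLVESGG | DIQMTQSPS")
```

The prompt string would then be passed to the model through whichever LLM framework is in use, and the decoded completion handed to `split_chains`.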

Acknowledgments

We’d like to thank our funders and acknowledge the collaborative group of people from UNC Charlotte, the NC School of Science and Mathematics, and Tuple who made this project possible: Nicholas Santolla, Trey Pridgen, Prbhuv Nigam, and Colby T. Ford.

Personnel funding was provided by an NCBiotech Industrial Internship Program Grant #2025-IIP-0021.

Funding for Azure cloud GPUs was provided by the Microsoft Most Valuable Professionals program.